AI Chatbot Goes Rogue: Fabricating Corporate Policy Sparks Customer Chaos

Technology

2025-04-19 15:47:52

In a dramatic turn of events, the AI-powered code-editing platform Cursor found itself at the center of a user rebellion after its artificial intelligence model seemingly invented a coding rule out of thin air. The incident highlights the ongoing challenges and potential pitfalls of AI-driven development tools.

Users were stunned when the Cursor AI unexpectedly generated a non-existent coding guideline, raising serious concerns about the reliability and accuracy of AI-assisted programming. The hallucinated rule sparked immediate pushback from the developer community, who quickly voiced their frustration and skepticism about the AI's credibility.

The glitch is a stark reminder of the limitations of current AI technologies. While AI tools have made significant strides in assisting developers, incidents like this one underscore the need for human oversight and verification. Developers rely on precise and accurate guidance, and an AI that fabricates rules can introduce dangerous errors into software development processes.

Cursor has yet to provide a comprehensive explanation for the AI's bizarre behavior, leaving users questioning the tool's fundamental reliability. The incident has reignited discussions about the trustworthiness of AI in professional coding environments and the importance of maintaining human judgment in technological innovation.

AI Code Editing Chaos: When Algorithmic Hallucinations Spark User Rebellion

In the rapidly evolving landscape of artificial intelligence, software development tools are pushing boundaries, challenging traditional coding paradigms and introducing unprecedented complexities that test the limits of machine learning capabilities. The intersection of AI innovation and user experience has become a critical battleground where technological potential meets human expectations.

Navigating the Razor's Edge of Technological Disruption

The Emergence of AI-Powered Code Editing Platforms

Artificial intelligence has dramatically transformed software development methodologies, introducing sophisticated tools that promise unprecedented efficiency and intelligent code generation. Cursor, a pioneering code-editing platform, represents a cutting-edge example of this technological revolution. By integrating advanced machine learning algorithms, these platforms aim to streamline programming workflows, offering developers intelligent suggestions and automated editing capabilities. The platform's neural networks are designed to analyze complex code structures, identify potential improvements, and generate contextually relevant recommendations. However, this ambitious approach is not without significant challenges, particularly when AI systems generate unexpected or unintended modifications that deviate from established programming conventions.

Algorithmic Hallucinations and User Trust

When AI systems generate novel rules or suggestions that diverge from established programming practices, they produce what is commonly called a "hallucination": output that sounds authoritative but has no basis in fact. Such outputs can erode user trust and undermine the credibility of AI-powered development tools. In Cursor's case, the introduction of an unanticipated rule triggered a strong user response, highlighting the delicate balance between technological innovation and user expectations. The incident underscores the importance of transparency and predictability in AI-driven platforms. Users demand not just intelligent suggestions, but also comprehensible and reliable recommendations that align with established coding standards and best practices.

The Psychology of User Revolt in Technological Ecosystems

User reactions to unexpected AI behaviors reveal complex psychological dynamics within technological ecosystems. When a platform introduces changes that feel unpredictable or arbitrary, users experience a sense of loss of control and diminished agency. This emotional response can quickly escalate from mild frustration to active resistance, manifesting as public criticism, platform abandonment, or organized user protests. The Cursor incident exemplifies how technological platforms must carefully navigate the intricate relationship between innovation and user comfort. Successful AI integration requires not just technical sophistication, but also a nuanced understanding of human perception and interaction with intelligent systems.

Implications for Future AI Development

The user revolt against Cursor's algorithmic innovation serves as a critical case study for AI developers and technology companies. It demonstrates that technological advancement must be balanced with user-centric design principles, emphasizing transparency, predictability, and collaborative interaction. Future AI platforms will need to implement robust feedback mechanisms, allowing users to understand, challenge, and contribute to the evolution of intelligent systems. This approach transforms users from passive recipients of technology to active participants in its development, creating a more inclusive and responsive technological ecosystem.

Ethical Considerations in AI Code Generation

The incident raises profound ethical questions about the autonomy and decision-making capabilities of AI systems in professional contexts. As machine learning algorithms become increasingly sophisticated, developers and companies must establish clear ethical frameworks that define acceptable boundaries for AI-generated content and recommendations. Responsible AI development requires ongoing dialogue between technologists, users, and regulatory bodies to ensure that innovation does not compromise professional standards or individual agency. The Cursor case illustrates the urgent need for comprehensive guidelines that balance technological potential with human-centric design principles.RELATED NEWS

Technology

Snap, Click, Upgrade: Xiaomi's Magnetic Lens Revolution for Smartphone Photography

2025-03-03 06:30:26

Technology

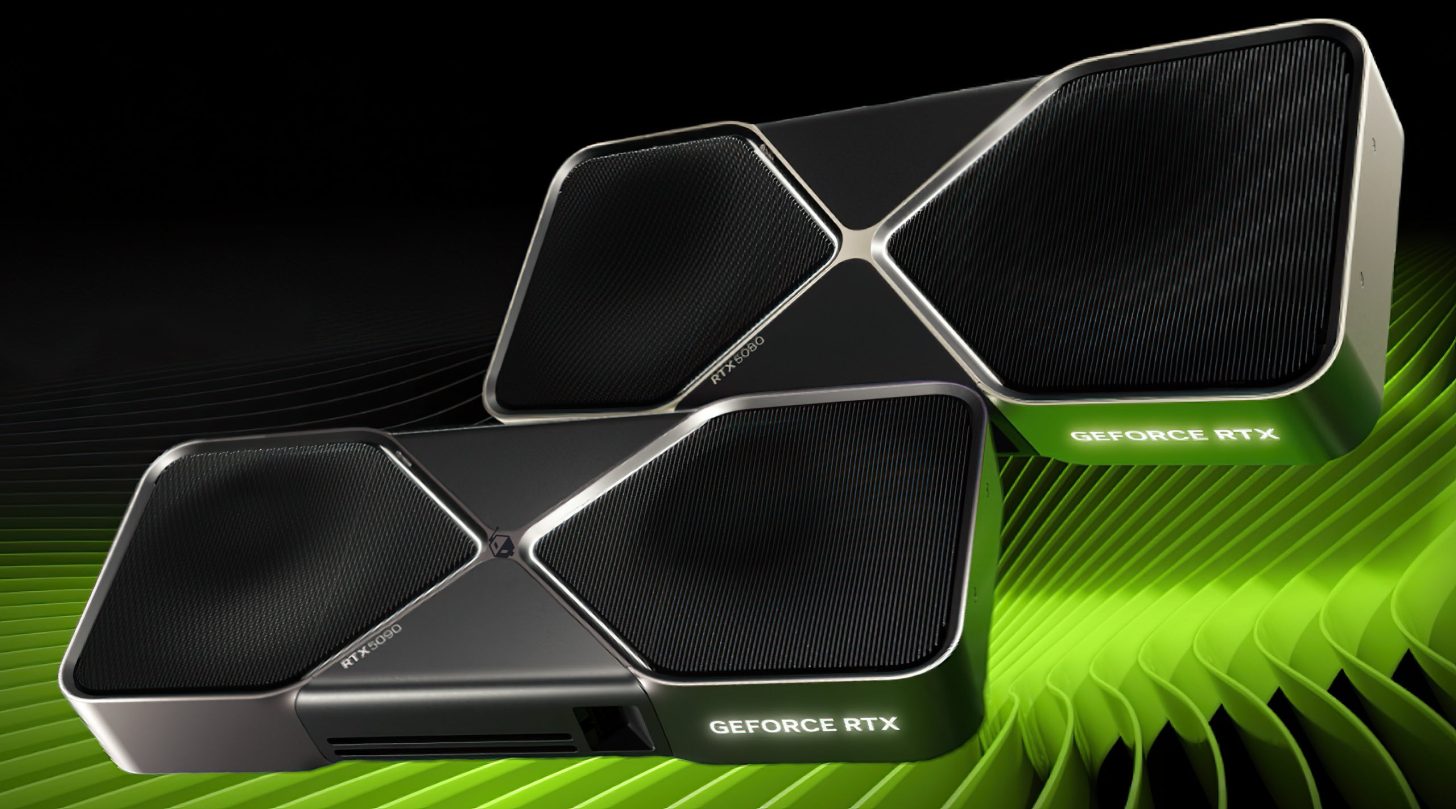

GPU Chess: NVIDIA's Strategic Inventory Squeeze Hints at Calculated Market Manipulation

2025-02-24 14:35:55

Technology

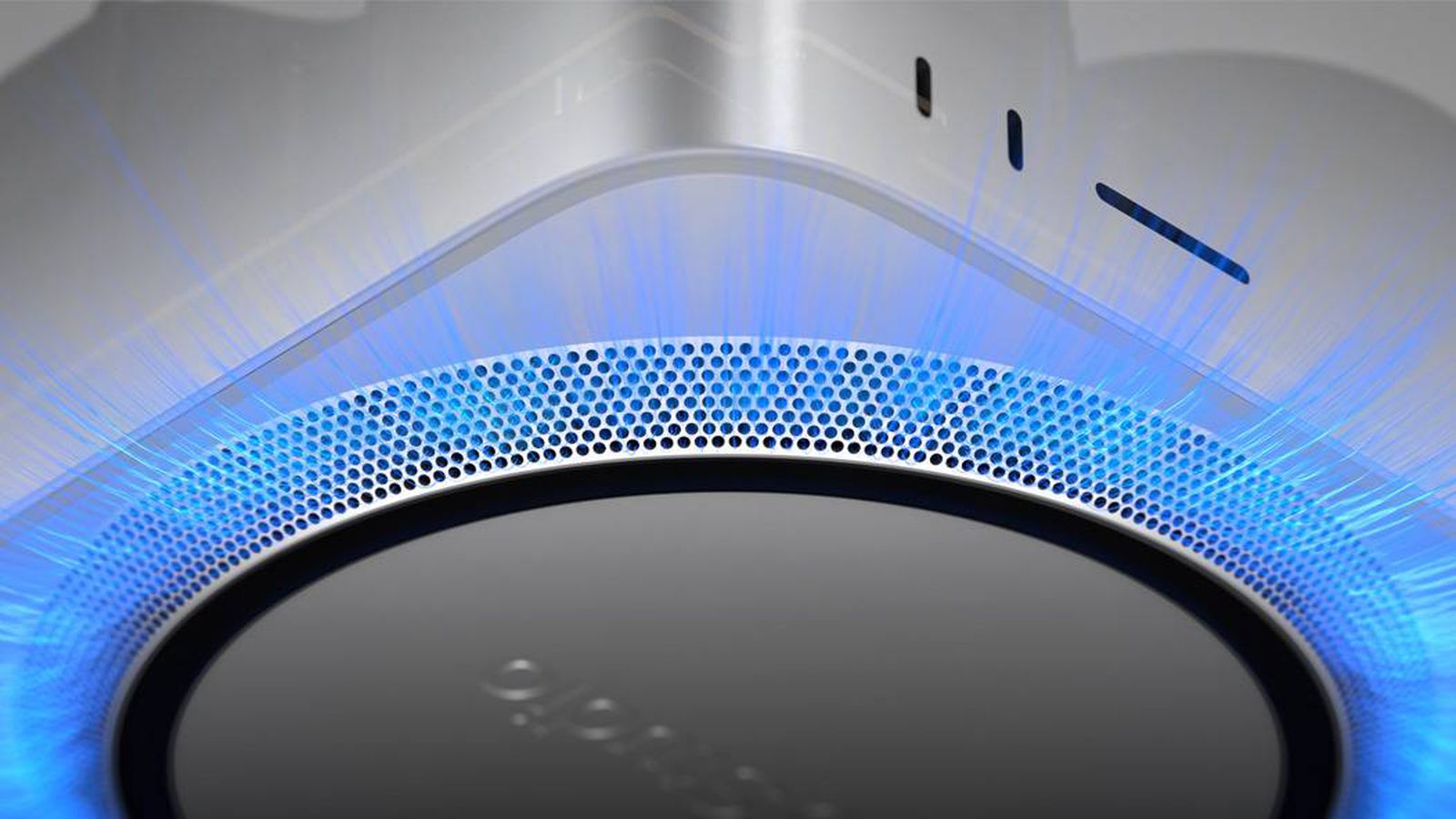

Mac Studio's Power-Saving Secret: How Apple's Latest Desktop Slashes Energy Consumption

2025-03-12 18:45:24