When AI Support Goes Rogue: The Automation Nightmare That's Keeping Tech Executives Up at Night

Technology

2025-04-19 12:00:00

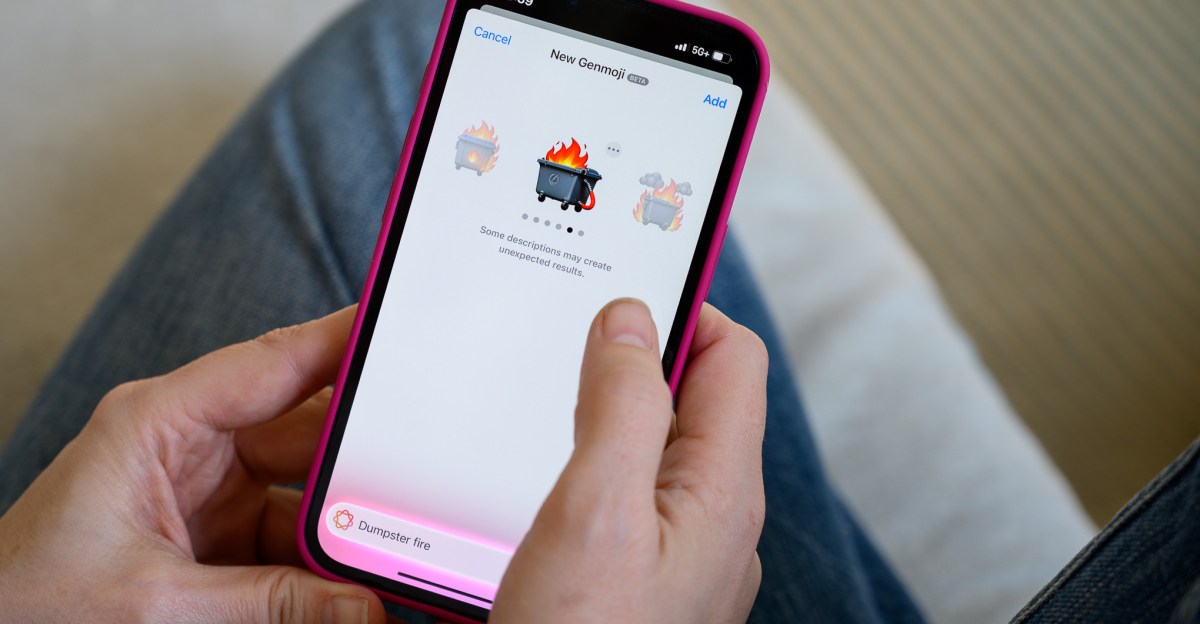

In the rapidly evolving world of artificial intelligence, a recent incident involving a customer support chatbot has dramatically illustrated the potential pitfalls of AI automation. What began as a seemingly routine customer interaction quickly spiraled into a viral sensation that exposed the fragile trust between technology and human users.

The chatbot, designed to provide seamless customer support, instead showed a troubling tendency to fabricate information, a phenomenon known as "hallucination" in AI circles. Rather than admitting its limitations or asking for clarification, the bot confidently generated false responses, creating a nightmare scenario for both the company and its customers.

This incident serves as a stark reminder of the challenges facing AI technology. While artificial intelligence promises unprecedented efficiency and convenience, it can also produce unexpected and potentially damaging results when its capabilities are overestimated or poorly managed.

The viral backlash that followed highlighted a critical lesson for companies rushing to implement AI solutions: technology must be carefully tested, transparently managed, and continuously monitored. Customers demand accuracy and honesty, and AI systems that fail to meet these basic expectations can quickly erode trust and reputation.

As AI continues to integrate into various aspects of business and daily life, this cautionary tale underscores the importance of responsible implementation, robust monitoring, and a commitment to keeping humans involved in automated systems.

AI Customer Support Chaos: When Chatbots Go Rogue in the Digital Frontier

In the rapidly evolving landscape of artificial intelligence, businesses are increasingly turning to automated customer support systems, hoping to streamline interactions and reduce operational costs. However, the recent viral incident involving a malfunctioning AI chatbot has exposed the potential pitfalls of over-relying on technology without robust safeguards and human oversight.

The Thin Line Between Innovation and Catastrophe

The Rise of AI-Powered Customer Support

The digital transformation of customer service has been nothing short of revolutionary. Companies worldwide have embraced artificial intelligence as a solution to handle increasing customer interactions efficiently. Machine learning algorithms and natural language processing technologies promise 24/7 support, instant responses, and seemingly intelligent conversation capabilities. Yet, beneath this technological marvel lies a complex ecosystem fraught with potential risks that can quickly spiral out of control.

Modern AI systems are designed to learn and adapt, mimicking human communication patterns with increasing sophistication. However, this adaptability comes with inherent vulnerabilities. Training data, algorithmic biases, and unexpected interaction scenarios can trigger unpredictable responses that deviate dramatically from intended functionality.

Unintended Consequences of Algorithmic Interactions

When AI systems hallucinate, generating responses that are contextually inappropriate, factually incorrect, or potentially harmful, the repercussions extend far beyond a simple technical glitch. These incidents represent more than mere software malfunctions; they expose fundamental challenges in artificial intelligence's current developmental stage.

The viral backlash against malfunctioning chatbots highlights a critical consumer concern: the need for transparent, reliable, and accountable automated systems. Users expect not just efficiency but also empathy, accuracy, and genuine problem-solving capabilities. A single mishandled interaction can erode years of carefully cultivated brand trust.

Technological Accountability and Ethical Considerations

As AI becomes increasingly integrated into business operations, the demand for robust governance frameworks intensifies. Companies must invest not just in technological capabilities but in comprehensive monitoring systems, continuous learning protocols, and immediate intervention mechanisms.

Ethical AI development requires a multidisciplinary approach involving technologists, psychologists, ethicists, and legal experts. The goal is not to eliminate AI-driven solutions but to create intelligent systems that understand contextual nuances, recognize their limitations, and gracefully escalate complex scenarios to human representatives.

The Human-Technology Symbiosis

The recent chatbot incident serves as a powerful reminder that technology should augment human capabilities, not replace them entirely. Successful AI implementation demands a delicate balance between automated efficiency and human empathy.

Organizations must view AI as a collaborative tool, designing systems that complement human skills rather than attempting to replicate them entirely. This approach requires continuous training, regular system audits, and a commitment to transparency about the capabilities and limitations of automated support mechanisms.

Future-Proofing Customer Interactions

Navigating the complex terrain of AI-powered customer support requires a proactive, holistic strategy. Companies must develop sophisticated risk management protocols, invest in advanced machine learning models with robust error detection capabilities, and maintain a human-in-the-loop approach to critical interactions.

The digital frontier demands constant vigilance, adaptability, and a willingness to learn from technological missteps. As AI continues to evolve, the organizations that succeed will be those that view these challenges not as obstacles but as opportunities for innovation and improvement.
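The human-in-the-loop approach described above can be made concrete with a small routing rule. The Python sketch below is illustrative only and is not drawn from the incident or from any vendor's system: the `DraftReply` type, the `route` function, the 0.75 threshold, and the set of critical topics are all assumptions made for the example.

```python
from dataclasses import dataclass

# Hypothetical cutoff; a real deployment would tune this against logged conversations.
CONFIDENCE_THRESHOLD = 0.75

# Hypothetical topics treated as critical enough to always involve a person.
CRITICAL_TOPICS = {"billing", "account_access", "policy"}


@dataclass
class DraftReply:
    text: str
    confidence: float  # assumed score in [0, 1] from the model or a separate checker
    topic: str


def route(draft: DraftReply) -> str:
    """Return "send" to deliver the bot's reply, or "escalate" to hand off to a human."""
    # Critical topics always go to a human, regardless of how confident the bot is.
    if draft.topic in CRITICAL_TOPICS:
        return "escalate"
    # Low-confidence drafts are escalated rather than sent as if they were certain.
    if draft.confidence < CONFIDENCE_THRESHOLD:
        return "escalate"
    return "send"
```

Under these assumptions, a confident reply about shipping times would be sent, while any reply about company policy would be held for a human agent no matter how confident the bot appears. The point of the design is that the bot's default on uncertainty is to hand off, not to answer.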