Tech Titans Clash: Microsoft Exposes Developers Exploiting AI Toolkit

Technology

2025-02-27 21:45:02

Microsoft has escalated its legal battle against deepfake creators by updating its lawsuit to specifically identify four individuals allegedly responsible for misusing the company's AI technology to generate unauthorized celebrity impersonations.

In a significant legal maneuver, the tech giant has now named the specific defendants who are accused of exploiting Microsoft's AI models to create sophisticated and potentially harmful digital replicas of well-known personalities. This amendment to the original lawsuit provides more precise details about the individuals behind these controversial deepfake productions.

The updated legal filing represents Microsoft's proactive stance in protecting intellectual property rights and preventing the potential misuse of advanced artificial intelligence technologies. By directly naming the defendants, Microsoft signals its commitment to holding individuals accountable for inappropriate and potentially damaging uses of AI-generated content.

While specific details about the defendants remain limited, the lawsuit underscores the growing concerns surrounding deepfake technology and its potential for creating misleading or harmful digital representations of real people.

Tech Titans Unleash Legal Fury: Microsoft's Deepfake Lawsuit Exposes Digital Impersonation Epidemic

In the rapidly evolving landscape of artificial intelligence, technology giants increasingly face challenges that test the boundary between innovation and ethics. Microsoft's latest legal maneuver marks a critical point in the ongoing battle against digital misrepresentation, with implications for intellectual property rights and personal digital integrity.

Unmasking the Digital Deception: When AI Crosses the Line

The Rising Threat of Synthetic Media Manipulation

The digital ecosystem has become a breeding ground for increasingly sophisticated forms of synthetic media manipulation. Microsoft's lawsuit represents more than a mere legal dispute; it's a profound statement about the urgent need to establish robust legal frameworks that can protect individuals from unauthorized digital impersonation. Advanced AI technologies have created unprecedented opportunities for malicious actors to generate hyper-realistic content that can damage reputations, violate personal rights, and undermine trust in digital communication platforms.

Technological advancements have exponentially increased the potential for creating convincing deepfakes, rendering traditional methods of digital verification increasingly obsolete. The ability to generate near-perfect replicas of individuals' likenesses using machine learning algorithms has transformed from a theoretical concern to a tangible threat affecting celebrities, public figures, and everyday individuals alike.

Legal Strategies in the Age of Artificial Intelligence

Microsoft's strategic approach to combating unauthorized AI model exploitation demonstrates a proactive stance in protecting intellectual property and establishing legal precedents. By amending the original lawsuit to specifically name the four defendants allegedly responsible for misusing AI models, the tech giant is sending a clear message about the consequences of unethical technological manipulation.

The legal action highlights the complex intersection between technological innovation and ethical boundaries. Each defendant represents a potential case study in how AI technologies can be weaponized to create harmful, unauthorized synthetic media. The lawsuit serves not just as a punitive measure but as a deterrent for potential future misuse of advanced machine learning technologies.

Technological and Ethical Implications of Deepfake Creation

The proliferation of deepfake technologies raises profound questions about consent, digital identity, and the fundamental rights of individuals in an increasingly digital world. Microsoft's lawsuit illuminates the critical need for comprehensive legal frameworks that can adapt to rapidly evolving technological landscapes.

Synthetic media creation technologies have advanced to a point where distinguishing between authentic and fabricated content has become increasingly challenging. This blurring of lines poses significant risks across multiple domains, including entertainment, journalism, personal reputation management, and national security. The potential for malicious actors to exploit these technologies demands a multifaceted approach involving technological innovation, legal intervention, and ethical guidelines.

Global Perspectives on Digital Impersonation

The Microsoft lawsuit transcends individual corporate interests, representing a global conversation about digital rights and technological accountability. As artificial intelligence continues to evolve at an unprecedented pace, international legal systems must develop sophisticated mechanisms to address emerging challenges in synthetic media creation.

Different jurisdictions are approaching this complex issue with varying degrees of urgency and comprehensiveness. Some nations are developing stringent regulations to combat digital impersonation, while others are still grappling with the fundamental technological and legal challenges posed by advanced AI systems. Microsoft's legal action could potentially serve as a catalyst for more comprehensive global discussions about digital ethics and technological governance.

Future Implications and Technological Safeguards

As artificial intelligence continues to advance, the development of robust technological safeguards becomes increasingly critical. Machine learning algorithms must be designed with inherent ethical constraints that prevent unauthorized and malicious usage. This requires collaborative efforts between technology companies, legal experts, ethicists, and policymakers.

The ongoing evolution of deepfake detection technologies represents a promising avenue for mitigating potential risks. Advanced machine learning models are being developed to identify synthetic media with increasing accuracy, offering hope for more effective digital verification mechanisms. However, this remains an ongoing technological arms race, with malicious actors continuously developing more sophisticated impersonation techniques.

Technology

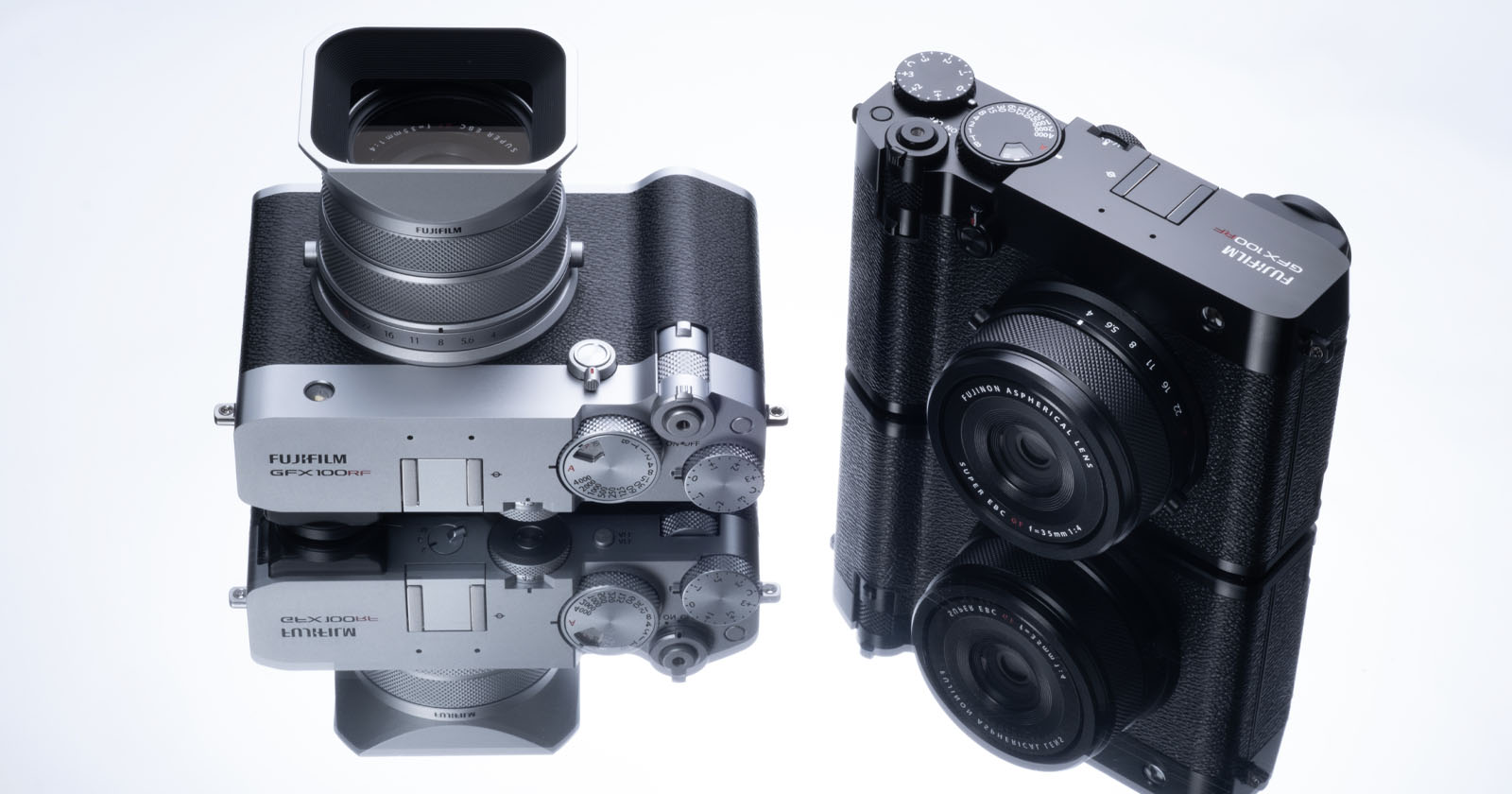

Fujifilm's Latest Marvel: A Medium Format Camera That Breaks All the Rules

2025-03-20 11:00:35

Technology

Breaking: Microsoft's Phi-4 AI Punches Above Its Weight, Challenges Tech Giants with Compact Powerhouse

2025-05-01 03:23:56

Technology

Smart Home Revolution: Alexa Gets an AI Supercharge with Amazon's Latest Breakthrough

2025-02-27 13:02:23